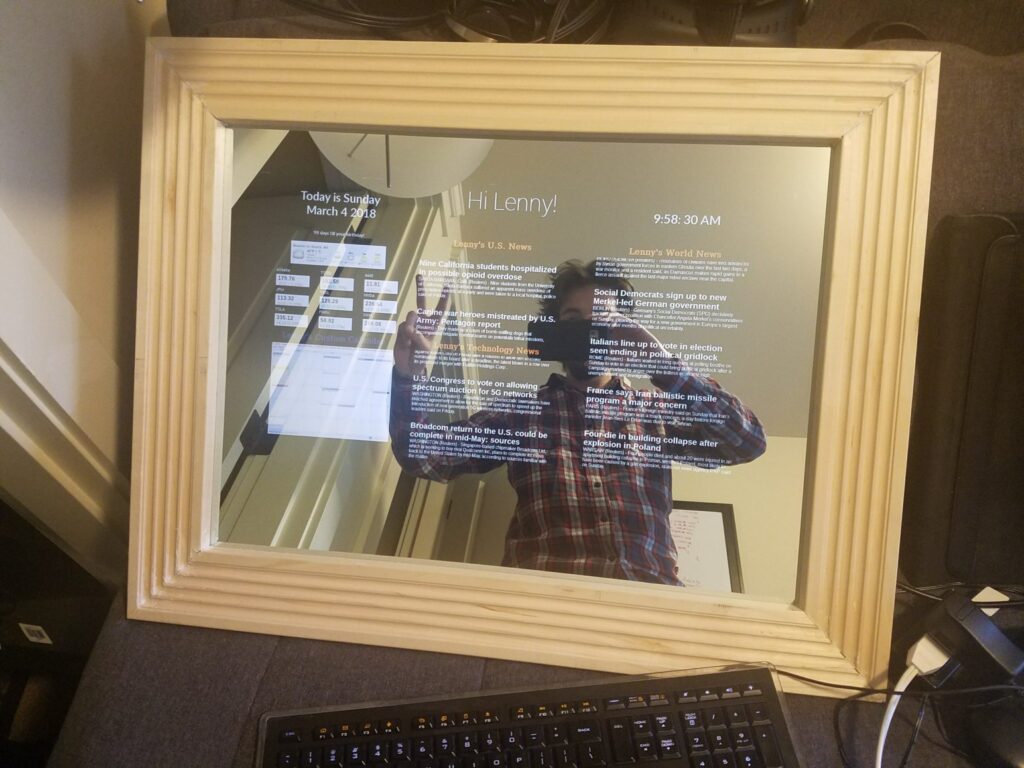

I completed this Smart Mirror in March of 2018 as my graduate project at Seattle University.

Skip to the very bottom for a full operational video of the device.

Introduction

I had always wanted to build a “Smart Mirror” but had not seen one that could seamlessly recognize its user in a privacy-conscious way and use that information to deliver custom content. Because such a device would most likely sit in a sensitive location (such as a bathroom), optical approaches to user recognition were not practical. Ease-of-use considerations ruled out physical interaction with the device as well. For these reasons, I investigated ways to recognize a user acoustically (i.e. learn a user’s “voice print”) and settled on a neural network that performs image recognition on spectrograms.

Voice-Recognition

Originally I had planned to do brute-force spectrogram analysis (counting fundamental frequencies and/or other attempts at manual pattern recognition). After a bit of research, I realized that using a Convolutional Neural Network (CNN) to do image analysis would be a far better approach: it would let me skip manual feature extraction, which would speed up development, and it would most likely predict with higher accuracy.
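To make the "spectrogram as an image" idea concrete, here is a minimal sketch of how a clip of audio can be turned into a 2-D array that a CNN could treat as a picture. This is only an illustration: the mirror itself used SoX for this step, and the function name `spectrogram_db`, the frame length, and the hop size are my own assumptions rather than values from the project.

```python
import numpy as np


def spectrogram_db(samples, frame_len=256, hop=128):
    """Return a (freq_bins, frames) array of log-magnitudes in dB.

    Hypothetical helper for illustration; frame_len and hop are assumed values.
    """
    samples = np.asarray(samples, dtype=np.float64)
    if samples.size < frame_len:
        raise ValueError("need at least one full frame of audio")

    # A Hann window tapers each frame to reduce spectral leakage.
    window = np.hanning(frame_len)
    n_frames = 1 + (samples.size - frame_len) // hop

    # Slice the signal into overlapping, windowed frames.
    frames = np.stack([
        samples[i * hop:i * hop + frame_len] * window
        for i in range(n_frames)
    ])

    # rfft keeps only the non-negative frequencies: frame_len // 2 + 1 bins.
    magnitude = np.abs(np.fft.rfft(frames, axis=1))

    # The small epsilon avoids log(0) on silent frames.
    return 20.0 * np.log10(magnitude + 1e-10).T
```

Each column of the result is one short slice of time and each row is one frequency band, so two people saying the same phrase produce visibly different "pictures". That difference is what the CNN learns, with no hand-written feature extraction.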

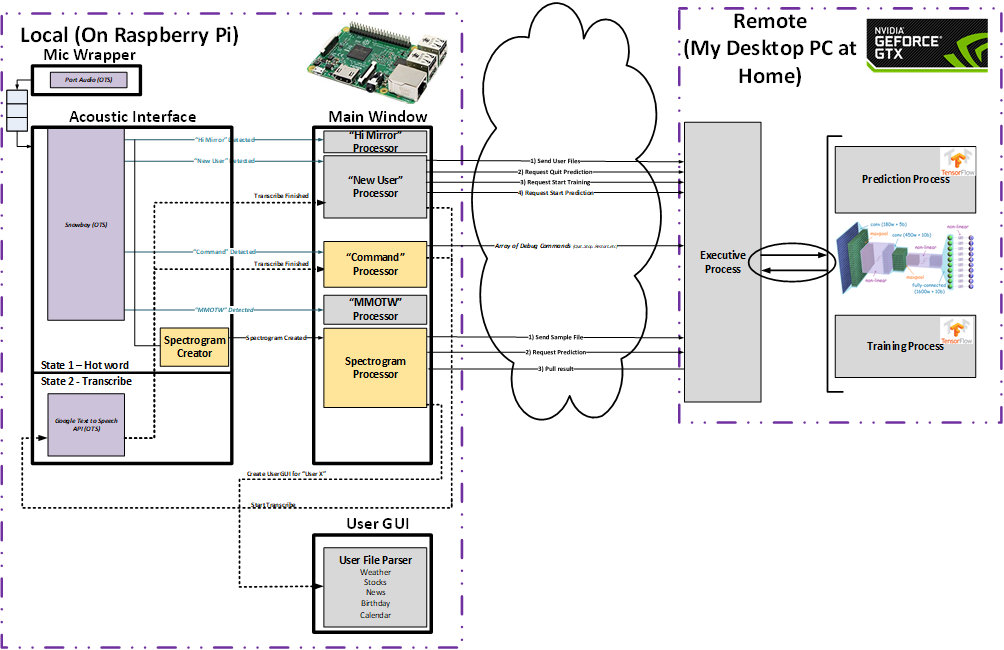

Distributed Architecture

Because the Raspberry Pi embedded in the device was not powerful enough to train a neural network in a practical amount of time (this was my first approach, and training took many hours), I made the system distributed so that I could use the GPU in my desktop PC, which is inherently well suited to neural network training. As a result, training the model went from ~9 hours to ~40 seconds. A block diagram of this final system is presented below.

After I implemented the CNN using TFLearn and TensorFlow, a user could simply utter the wake phrase (“Hi Mirror”), and the device accurately predicted who, out of its database, was most likely talking and displayed their custom information (e.g. their calendar, stocks, and news) right on the mirror’s surface.
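The last step, going from the network's output to "who is talking", can be sketched in a few lines. This is a simplified illustration and not the mirror's actual code: it assumes the CNN outputs one probability per enrolled user, and the `pick_user` name and the `min_confidence` cut-off are hypothetical additions of mine.

```python
def pick_user(probabilities, users, min_confidence=0.5):
    """Return the most likely user, or None if the network is unsure.

    Hypothetical helper: assumes one probability per enrolled user.
    The min_confidence threshold is an assumption, not from the project.
    """
    if len(probabilities) != len(users):
        raise ValueError("one probability per enrolled user is required")

    # Index of the highest-scoring user.
    best = max(range(len(users)), key=lambda i: probabilities[i])

    # Refuse to guess when even the best score is weak.
    if probabilities[best] < min_confidence:
        return None
    return users[best]
```

For example, `pick_user([0.1, 0.8, 0.1], ["alice", "bob", "carol"])` selects `"bob"`, whose home screen would then be shown.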

For further details on the system please see the following White Paper.

Project Milestones

PortAudio, Snowboy, and Qt working together. This was the first major milestone: getting audio, Snowboy, and the Qt development environment to all compile and run together without issues.

Using SoX and Snowboy to isolate “Hi Mirror” and convert it into a spectrogram.

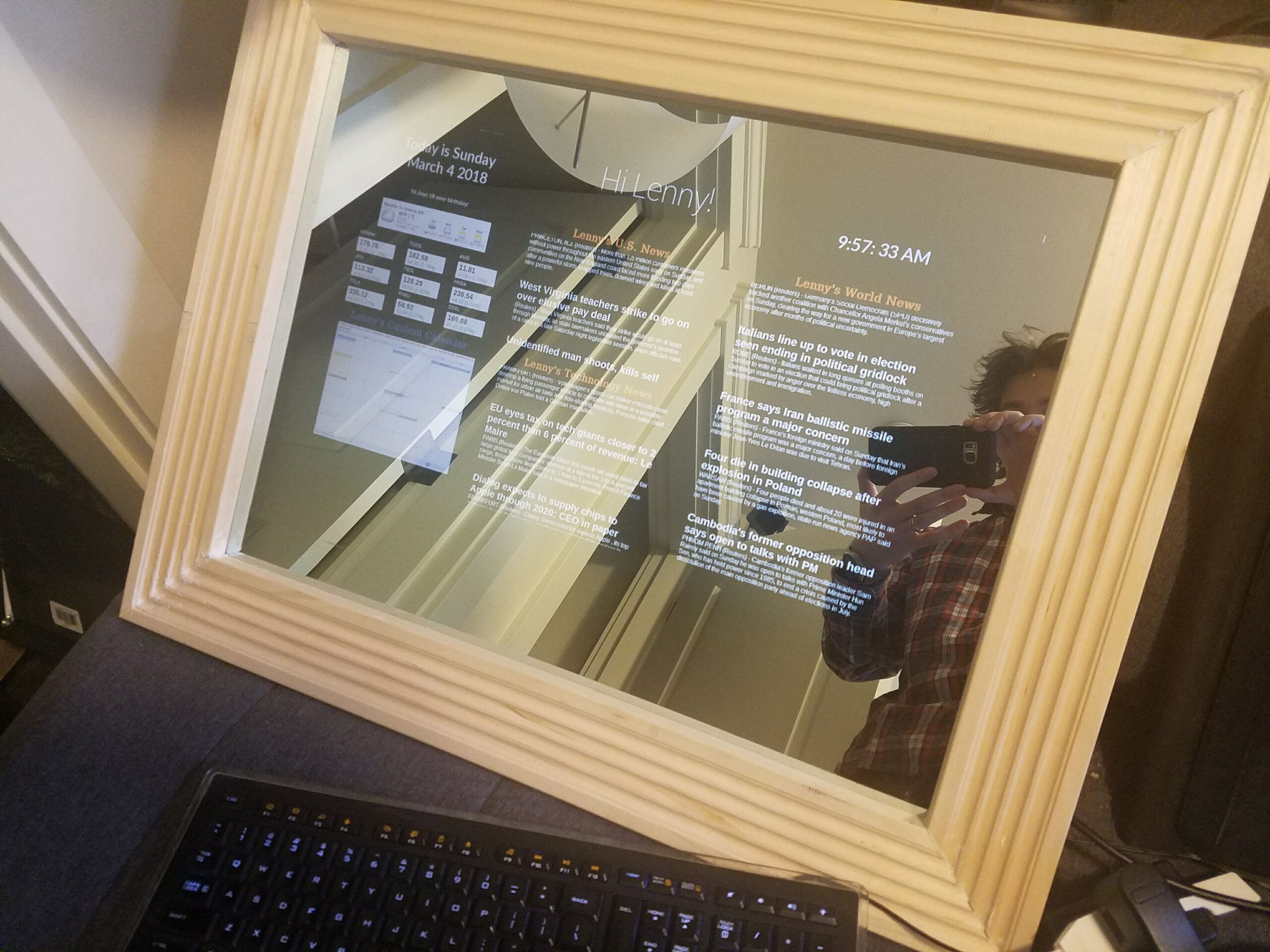
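For anyone curious what the SoX step might look like when scripted, here is a rough sketch that calls SoX's `spectrogram` effect from Python. The exact options used in the project are not shown here, so the function names, the image size, and the choice of raw monochrome output are all assumptions; the input file is taken to be a clip that already contains only the wake phrase.

```python
import subprocess


def sox_spectrogram_command(wav_path, png_path, width=128, height=129):
    """Build a SoX command line that renders wav_path as a PNG spectrogram.

    Hypothetical helper; width and height are assumed values.
    """
    return [
        "sox", wav_path, "-n",   # -n: no audio output file, only the image
        "spectrogram",
        "-r",                    # raw: suppress axes and legend
        "-m",                    # monochrome instead of the default colours
        "-x", str(width),        # image width in pixels
        "-y", str(height),       # image height in pixels (frequency bins)
        "-o", png_path,          # where to write the PNG
    ]


def render_spectrogram(wav_path, png_path):
    """Run SoX (must be installed) and raise if it exits with an error."""
    subprocess.run(sox_spectrogram_command(wav_path, png_path), check=True)
```

Suppressing the axes and legend means the image contains only the voice data, which seems like a sensible input for a classifier.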

Converting to a black theme so that it looks better behind the 2-way glass.

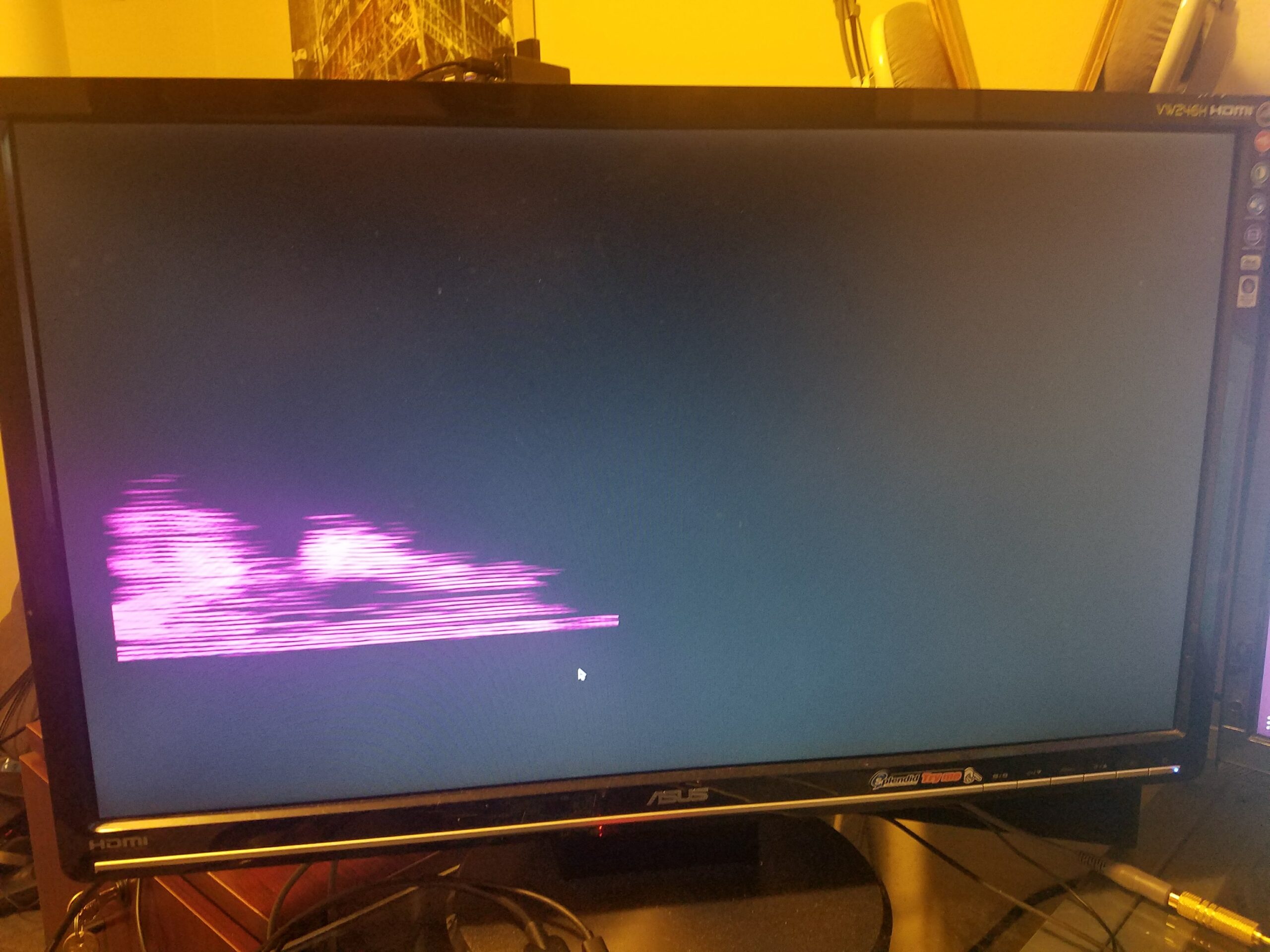

Porting CNN training to my desktop PC. The video below shows that using a GPU to train a neural net is way faster than the Raspberry Pi. Can you guess which screen is which?

Dedicated super-thin LCD monitor arrives. That light bleed is unfortunate though. If money were not a concern, OLED would be the way to go here.

The two way glass arrives. Looks like background light has to be minimized as much as possible for the effect to work.

Time to get the hacksaw and build a custom frame

Playing with some home-screen ideas…

Sorry Jane, a real-world test is the best way to work out the bugs

Presenting to Computer Science faculty and staff at Seattle University

Video of the Device

A full operational overview of the device is presented here. It is a bit long, but goes through the entire functionality from adding and training users to setting up a sample homepage.

If you’d like to skip around, here is a summary of the video sections:

00:00-00:45 -> Intro

00:45-02:30 -> Architecture

02:30-03:15 -> Remove existing users

03:15-07:00 -> Adding male and female users

07:00-07:50 -> Testing male and female users

07:50-09:50 -> Adding another male user

09:50-10:50 -> Testing all three users

10:50-11:45 -> Intro to home screen

11:45-15:00 -> Setting up a home screen for a user

15:00-16:05 -> Showing off the home screen

16:05-17:18 -> Wrap-up and conclusion